Thursday, December 16, 2021

Camlex 5.4 release: switch to MIT license

Hello Sharepoint developers who use Camlex in their work. I'm glad to announce that starting with version 5.4 Camlex will use the MIT license. Before that Camlex was distributed under the Ms-PL license, but nowadays MIT has become the standard for open source projects as one of the most permissive licenses (e.g. PnP.Framework also uses the MIT license). In order to be in line with this trend I changed the Camlex license to MIT. New nuget packages with version 5.4 for both the basic object model version and the CSOM version are already available for download.

Wednesday, December 1, 2021

How to run continuous Azure web job as singleton

Continuous Azure web jobs may be used as subscribers to external events (e.g. a new item has been added to an Azure storage queue). Unlike scheduled web jobs, which are started by a scheduler (you need to specify a CRON expression for them), continuous web jobs are always running and react to the events they are subscribed to.

When you scale out your Azure App Service to multiple instances (web jobs run in the background on the VM of each instance), web jobs are scaled as well by default, i.e. they run on all instances. However, it is possible to change this behavior and run a continuous web job as a singleton on only 1 instance.

When you create a continuous web job in the Azure portal, there is a Scale field which is set to Multi instance by default.

As the tooltip says:

Multi-instance will scale your WebJob across all instances of your App Service plan, single instance will only keep a single copy of your WebJob running regardless of App Service plan instance count.

So during creation of the web job we may set Scale = Single instance, and Azure will create it as a singleton.

If you don't want to rely on this setting, which can be changed from the UI, you may add a settings.job file with the following content:

{ "is_singleton": true }

to the root folder of your web job (the same folder which contains the web job's executable file). In this case Azure will create the web job as a singleton even if Scale = Multi instance is selected in the UI, i.e. settings.job has priority over the UI setting.
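If the web job is packaged by a build script, settings.job can be generated next to the executable before the package is zipped and uploaded. A minimal Python sketch, assuming the web job has already been built into a local folder; `write_singleton_settings` is a hypothetical helper, not part of any Azure tooling. It keeps any other keys already present in settings.job and only sets is_singleton:

```python
import json
from pathlib import Path


def write_singleton_settings(webjob_dir):
    """Create or update settings.job in the web job root folder with is_singleton = true.

    webjob_dir is assumed to be the folder that contains the web job executable.
    Other keys that may already be in settings.job are preserved.
    """
    settings_path = Path(webjob_dir) / "settings.job"
    settings = {}
    if settings_path.exists():
        # utf-8-sig tolerates a BOM that an editor may have added
        settings = json.loads(settings_path.read_text(encoding="utf-8-sig"))
    settings["is_singleton"] = True
    settings_path.write_text(json.dumps(settings), encoding="utf-8")
    return settings_path
```

For example, `write_singleton_settings("bin/Release/MyWebJob")` (a hypothetical output path) would produce a file containing `{"is_singleton": true}`.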

If you check the logs of a continuous web job created using the above method, you should see something like this:

Status changed to Starting
WebJob singleton settings is True
WebJob singleton lock is acquired

which proves that the job runs as a singleton.

Thursday, November 25, 2021

Sharepoint Online remote event receivers attached with Add-PnPEventReceiver depend on used authentication method

Today I faced another strange problem related to SPO remote event receivers (I wrote about another strange problem here: Strange problem with remote event receivers not firing in Sharepoint Online sites which urls/titles ends with digits). I attach a remote event receiver using the Add-PnPEventReceiver cmdlet like this:

Connect-PnPOnline -Url ...
$list = Get-PnPList "MyList"
Add-PnPEventReceiver -List $list.Id -Name TestEventReceiver -Url ... -EventReceiverType ItemUpdated -Synchronization Synchronous

In most cases the RER is attached without any errors. But the question is whether it will be fired after that. As it turned out, it depends on how exactly you connect to the parent SPO site with Connect-PnPOnline: the remote event receiver is attached successfully in all cases (you may see it in Sharepoint Online Client Browser), but in some cases it won't be triggered. In fact I found that it is triggered only if you connect with the UseWebLogin parameter, while in all other cases it is not. In the table below I summarized all the methods I tried:

| # | Method | Is RER fired? |
|---|--------|---------------|
| 1 | Connect-PnPOnline -Url ... -ClientId {clientId} -Interactive | No |
| 2 | Connect-PnPOnline -Url ... -ClientId {clientId} | No |
| 3 | Connect-PnPOnline -Url ... | No |
| 4 | Connect-PnPOnline -Url ... -UseWebLogin | Yes |

Monday, November 22, 2021

Strange problem with remote event receivers not firing in Sharepoint Online sites which urls/titles ends with digits

Some time ago I wrote about Sharepoint Online remote event receivers (RERs) and how to use Azure functions with them: Use Azure function as remote event receiver for Sharepoint Online list and debug it locally with ngrok. When I tested RERs on other SPO sites, I faced a very strange problem: on sites whose urls/titles end with digits, remote event receivers were not fired. E.g. I often create modern Team/Communication sites with a datetime stamp at the end:

https://{tenant}.sharepoint.com/sites/{Prefix}{yyyyMMddHHmm}

e.g.

https://{tenant}.sharepoint.com/sites/Test202111221800

I noticed that on such sites the RER was not called for some reason. I used the same PowerShell script for attaching the RER to the SPO site as described in the article above, and the same Azure function app running locally with the same ports and ngrok tunneling.

After I created a site without digits at the end (https://{tenant}.sharepoint.com/sites/test), the RER started to work without restarting the AF or ngrok, i.e. I used the same running instances of AF/ngrok for all tests. I haven't found any explanation of this problem so far. If you have faced this issue and know the reason, please share it.

Friday, November 12, 2021

Fix Azure functions error "Repository has more than 10 non-decryptable secrets backups (host)"

Today I faced a strange issue: after an update of an Azure function app published from Visual Studio, the Azure functions stopped working. The following error was shown in the logs:

Repository has more than 10 non-decryptable secrets backups (host)

In order to fix this error perform the following steps:

1. In the Azure portal go to Azure function app > Deployment center > FTPS credentials and copy the credentials for connecting to the function app over FTP.

2. Then connect to the Azure function app over FTP, e.g. using the WinSCP client.

3. Go to the /data/functions/secrets folder and remove all files whose names have the following form:

*.snapshot.{timestamp}.json

4. After that go to Azure portal > Function app and restart it. It should start now.
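Step 3 can also be scripted. A minimal Python sketch using the standard ftplib module, assuming the FTPS host, user name and password copied in step 1 and the folder path from step 3; `delete_secret_snapshots` and `select_snapshots` are hypothetical helpers. It deletes only entries matching the snapshot pattern, so the current secret files (e.g. host.json) are left alone, and the restart in step 4 still has to be done:

```python
import fnmatch
from ftplib import FTP_TLS

SECRETS_DIR = "/data/functions/secrets"
# matches e.g. host.snapshot.<timestamp>.json
SNAPSHOT_PATTERN = "*.snapshot.*.json"


def select_snapshots(names):
    """Return the entries that look like secret snapshot backups."""
    # compare only the file name part, in case the server returns full paths
    return [n for n in names if fnmatch.fnmatch(n.rsplit("/", 1)[-1], SNAPSHOT_PATTERN)]


def delete_secret_snapshots(host, user, password):
    """Connect over FTPS and delete snapshot backups from the secrets folder.

    host, user and password are the FTPS credentials copied from Deployment center.
    Returns the list of deleted entries.
    """
    with FTP_TLS(host) as ftp:
        ftp.login(user, password)
        ftp.prot_p()  # protect the data channel as well
        ftp.cwd(SECRETS_DIR)
        deleted = select_snapshots(ftp.nlst())
        for name in deleted:
            ftp.delete(name)
        return deleted
```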

Wednesday, November 3, 2021

How to configure number of retries for processing failed Azure queue message before it will be moved to poison queue

In my previous post I showed how to configure the Azure web job host to set the number of queue messages which may be processed simultaneously (in parallel): How to set limit on simultaneously processed queue messages with continuous Azure web job. In this post I will show how to configure the web job host for processing failed messages.

As mentioned in the previous post, when a message is added to an Azure queue, the runtime calls the method which has a parameter decorated with the [QueueTrigger] attribute. If there is an unhandled exception, the message is marked as failed and the Azure runtime will try to process it again: by default a message is processed at most 5 times before it is moved to the poison queue.

If we don't need 5 attempts (e.g. if our logic requires only 1 attempt), we may change this behavior using the following code:

public virtual void RunContinuous()
{
    var storageConn = ConfigurationManager.ConnectionStrings["AzureWebJobsDashboard"].ConnectionString;
    var config = new JobHostConfiguration();
    config.StorageConnectionString = storageConn;
    // move a failed message to the poison queue after the first failed attempt
    config.Queues.MaxDequeueCount = 1;
    var host = new JobHost(config);
    host.RunAndBlock();
}

public void ProcessQueueMessage([QueueTrigger("orders")]string message, TextWriter log)
{
    log.WriteLine("New message has arrived from queue");
    ...
}

I.e. we set config.Queues.MaxDequeueCount to 1. In this case a failed message will be moved to the poison queue (orders-poison in this example) immediately, and the web job won't try to process it again.
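To make the MaxDequeueCount semantics concrete, here is a simplified Python simulation, not the WebJobs SDK itself: each failed attempt puts the message back on the queue, and once its dequeue count reaches the limit the message goes to a poison list. `run_queue` and its parameters are hypothetical; the real runtime also waits for the message's visibility timeout before a retry, and the simulation assumes message values are unique:

```python
from collections import deque


def run_queue(messages, handler, max_dequeue_count=5):
    """Simulate retries of failed queue messages and moving them to a poison queue.

    Returns (attempts per message, poison queue contents).
    """
    queue = deque((m, 0) for m in messages)  # (message, dequeue count so far)
    attempts = {}
    poison = []
    while queue:
        message, dequeue_count = queue.popleft()
        dequeue_count += 1
        attempts[message] = dequeue_count
        try:
            handler(message)
        except Exception:
            if dequeue_count >= max_dequeue_count:
                poison.append(message)  # give up: "<queue>-poison"
            else:
                queue.append((message, dequeue_count))  # becomes visible again
    return attempts, poison
```

With the default limit a failing message is attempted 5 times; with `max_dequeue_count=1` it goes to the poison list after the first failure, which matches the configuration above.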

Tuesday, November 2, 2021

How to set limit on simultaneously processed queue messages with continuous Azure web job

With a continuous Azure web job we may create a web job host which will listen to a specified Azure storage queue. When a new message is added to the queue, Azure will trigger the handler method found in the continuous web job's assembly (a public method which has a parameter with the [QueueTrigger] attribute):

public virtual void RunContinuous()
{
    var storageConn = ConfigurationManager.ConnectionStrings["AzureWebJobsDashboard"].ConnectionString;
    var config = new JobHostConfiguration();
    config.StorageConnectionString = storageConn;
    var host = new JobHost(config);
    host.RunAndBlock();
}

public void ProcessQueueMessage([QueueTrigger("orders")]string message, TextWriter log)
{
    log.WriteLine("New message has arrived from queue");
    ...
}

If several messages are added simultaneously, Azure will trigger several instances of the handler method in parallel. The number of messages which can be processed in parallel can be configured via the JobHostConfiguration.Queues.BatchSize property. By default it is set to 16, i.e. by default 16 messages can be processed simultaneously in one batch.

If we want, e.g., to configure the continuous web job so it processes only 1 message at a time, we can set BatchSize to 1:

public virtual void RunContinuous()
{
    var storageConn = ConfigurationManager.ConnectionStrings["AzureWebJobsDashboard"].ConnectionString;
    var config = new JobHostConfiguration();
    config.StorageConnectionString = storageConn;
    // set batch size to 1
    config.Queues.BatchSize = 1;
    var host = new JobHost(config);
    host.RunAndBlock();
}

public void ProcessQueueMessage([QueueTrigger("orders")]string message, TextWriter log)
{
    log.WriteLine("New message has arrived from queue");
    ...
}

Using the same approach we can increase BatchSize from the default 16 to a bigger value. However, the maximum allowed value (MaxBatchSize) is 32, so BatchSize can be increased only up to 32.
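The effect of BatchSize on parallelism can be illustrated with a bounded worker pool. This is a simplified Python analogy, not the SDK: the WebJobs SDK fetches messages in batches and also has a NewBatchThreshold setting, so its exact scheduling differs. `process_all` is a hypothetical helper which reports the highest number of handlers observed running at the same time:

```python
import threading
from concurrent.futures import ThreadPoolExecutor


def process_all(messages, handler, batch_size=16):
    """Run handler for every message with at most batch_size calls in parallel.

    Returns the peak number of handlers that were running concurrently.
    """
    lock = threading.Lock()
    running = 0
    peak = 0

    def wrapped(message):
        nonlocal running, peak
        with lock:
            running += 1
            peak = max(peak, running)
        try:
            handler(message)
        finally:
            with lock:
                running -= 1

    with ThreadPoolExecutor(max_workers=batch_size) as pool:
        # list() waits for all handlers and re-raises their exceptions
        list(pool.map(wrapped, messages))
    return peak
```

With `batch_size=1` the peak is always 1, i.e. messages are handled strictly one after another, which is what BatchSize = 1 achieves in the web job.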

Monday, October 18, 2021

Obfuscar + NVelocity + anonymous types = issue

Some time ago I faced an interesting issue related to Obfuscar and anonymous types used with the NVelocity template engine. If you use NVelocity for populating templates, your code may look like this:

string Merge(string templateText, params Tuple<string, object>[] args)
{
    // put all (name, value) pairs into the template context
    var context = new VelocityContext();
    foreach (var t in args)
    {
        context.Put(t.Item1, t.Item2);
    }
    var velocity = new VelocityEngine();
    var props = new ExtendedProperties();
    velocity.Init(props);
    // expand the template into a string
    using (var sw = new StringWriter())
    {
        velocity.Evaluate(context, sw, "", templateText);
        sw.Flush();
        return sw.ToString();
    }
}

Here we pass templateText itself and tuple(s) of (key, value) pairs, where key is the string name of a parameter used inside the template (like $p) and value is the object which is used for expanding this parameter into a real string value in the resulting text. E.g. if you have "$p.Foo" inside the template, pass an object which has a "Foo" string property set to "bar"; then "$p.Foo" in the template will be replaced by "bar".

Anonymous types can be used for objects passed as parameters into this method, which is very convenient (the explicit type arguments are needed because Tuple<string, object> is not covariant):

string text = Merge("My object $p.Foo", Tuple.Create<string, object>("p", new { Foo = "bar" }));

If you run this code as is, it will return "My object bar", which is correct. But if you obfuscate your code with Obfuscar, you will get an unexpected result: instead of the expanded template you will get the original template text "My object $p.Foo", i.e. the template won't be expanded.
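The symptom can be reproduced with a toy resolver. This Python sketch is not NVelocity; it only mimics the relevant behavior that Velocity renders a reference it cannot resolve literally. `merge` is a hypothetical stand-in for the C# Merge() helper, and an object without a Foo attribute plays the role of an anonymous type whose properties were removed by obfuscation:

```python
import re

# matches references like $p.Foo: a variable name followed by one property name
REFERENCE = re.compile(r"\$(\w+)\.(\w+)")


def merge(template_text, **args):
    """Expand $name.Property references using the passed objects.

    A reference that cannot be resolved is left in the output unchanged.
    """
    def expand(match):
        obj = args.get(match.group(1))
        prop = match.group(2)
        if obj is None or not hasattr(obj, prop):
            return match.group(0)  # unresolved: keep the original text
        return str(getattr(obj, prop))

    return REFERENCE.sub(expand, template_text)
```

With an object exposing Foo = "bar" the result is "My object bar"; with the property gone the template comes back unchanged, exactly as in the obfuscated build.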

The reason for this problem is that Obfuscar by default removes properties of anonymous types; there are issues about that: Anonymous class obfuscation removes all the properties and Anonymous Types. In order to solve the problem you need to instruct Obfuscar not to obfuscate anonymous types:

<Module file="MyAssembly.dll">
  <SkipType name="*AnonymousType*" skipProperties="true" skipMethods="true" skipFields="true" skipEvents="true" skipStringHiding="true" />
</Module>

After that anonymous types won't be changed and NVelocity templates will be populated to string correctly.

Friday, October 15, 2021

Convert self-signed SSL certificate's private key from .pfx to .key and .crt

Some time ago I wrote a post about how to use a self-signed SSL certificate in Azure App service: Use self-signed SSL certificate for web API hosted in Azure App service. In that article we generated a self-signed certificate, exported its private key to a pfx file and used it in Azure. But what if you use another hosting provider which requires the private key in .key format and the SSL certificate itself in a .crt file? The good news is that it is possible to create .key/.crt files from .pfx, and in this article I will show how to do that.

We will need the openssl.exe tool which will do the actual work. If you try to Google it, there will be plenty of 3rd-party sites where you may download it. The problem, however, is that there is no guarantee that these download links are safe (i.e. that they don't contain malware and viruses). A safer option is to use openssl.exe which is shipped with the git client for Windows; I found mine in the following location:

C:\Program Files\Git\usr\bin\openssl.exe

With this tool, in order to export the SSL certificate from .pfx to .crt we may use the following command:

openssl pkcs12 -in myCert.pfx -clcerts -nokeys -out myCert.crt

and in order to export the private key from .pfx to .key format, the following one:

openssl pkcs12 -in myCert.pfx -nocerts -out myCert-encrypted.key

Here you will need to specify the pfx password and also provide a so-called PEM pass phrase which will protect the .key file, i.e. the private key in .key format will still be encrypted. If you need it in unencrypted format (ensure that it is stored in a safe location in this case), use the following command:

openssl rsa -in myCert-encrypted.key -out myCert-decrypted.key

After that you will be able to use self-signed SSL certificate in .key/.crt format.

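If you need the certificate in .pem format, you may get it by concatenating the myCert-decrypted.key and myCert.crt files. The resulting .pem file should have the following parts (the first is copied from myCert-decrypted.key and the second from myCert.crt; with OpenSSL 3.x the key header may be BEGIN PRIVATE KEY instead, because that version writes keys in PKCS#8 format by default):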

-----BEGIN RSA PRIVATE KEY-----
...
-----END RSA PRIVATE KEY-----
-----BEGIN CERTIFICATE-----
...
-----END CERTIFICATE-----

So if your hosting provider requires .pem file you will be able to use it there.
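The concatenation itself can be scripted so the order of the parts is always right. A minimal Python sketch, assuming the file names used above; `build_pem` is a hypothetical helper. It refuses a key that still looks encrypted (for example myCert-encrypted.key), since the .pem described above is built from the decrypted key:

```python
from pathlib import Path


def build_pem(key_path, crt_path, pem_path):
    """Concatenate a decrypted private key and a certificate into one .pem file.

    The key block goes first and the certificate block second.
    """
    key = Path(key_path).read_text(encoding="utf-8").strip()
    crt = Path(crt_path).read_text(encoding="utf-8").strip()
    # covers both "BEGIN ENCRYPTED PRIVATE KEY" and "Proc-Type: 4,ENCRYPTED" headers
    if "ENCRYPTED" in key or "PRIVATE KEY-----" not in key:
        raise ValueError("expected an unencrypted PEM private key (e.g. myCert-decrypted.key)")
    if "BEGIN CERTIFICATE-----" not in crt:
        raise ValueError("expected a PEM certificate (e.g. myCert.crt)")
    Path(pem_path).write_text(key + "\n" + crt + "\n", encoding="utf-8")
    return Path(pem_path)
```

Usage: `build_pem("myCert-decrypted.key", "myCert.crt", "myCert.pem")`.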

Monday, October 11, 2021

One reason for Connect-SPOService error: The sign-in name or password does not match one in the Microsoft account system

Today we faced an interesting issue. When we tried to use the Connect-SPOService cmdlet on one tenant, we got the following error:

The sign-in name or password does not match one in the Microsoft account system

Interestingly, on other tenants this cmdlet worked properly. Troubleshooting and searching showed that one of the reasons for this error can be enabled MFA (more specifically, when Connect-SPOService is used with the -Credential parameter). However, we double checked that MFA was disabled for the accounts for which this error was shown.

Then we tried to log in to the O365 site with this account in a browser, and my attention was drawn to the following message which was shown after successful login:

Microsoft has enabled security defaults to keep your account secure.

As it turned out, AAD Security defaults were enabled on this tenant, which forced MFA for all users. In turn it caused the mentioned error with Connect-SPOService. The solution was to disable security defaults in the AAD properties.

After that the error disappeared and we were able to use SPO cmdlets.

Friday, October 8, 2021

Fix Azure web job startup error "Storage account connection string 'AzureWebJobsStorage' does not exist"

If you develop a continuous Azure web job which monitors e.g. Azure storage queue messages, your code may look like this (in this example we use v3 of Microsoft.Azure.WebJobs, the latest version at the time of writing):

static void Main(string[] args)
{
    var builder = new HostBuilder();
    builder.UseEnvironment("development");
    builder.ConfigureWebJobs(b =>
    {
        b.AddAzureStorageCoreServices();
        b.AddAzureStorage();
    });
    builder.ConfigureLogging((context, b) =>
    {
        b.AddConsole();
    });
    var host = builder.Build();
    using (host)
    {
        host.Run();
    }
}

public static void ProcessQueueMessage([QueueTrigger("myqueue")] string message, TextWriter log)
{
    ...
}

Here in the Main method (which is the entry point of the continuous web job) we create a host which subscribes to the specified queue. When a new message is added to the queue, it should trigger our ProcessQueueMessage handler. However, on startup you may get the following error:

Storage account connection string 'AzureWebJobsStorage' does not exist

This error is thrown when the host can't find the AzureWebJobsStorage connection string of the Azure storage account where the specified queue is located. In order to avoid this error we need to create an appsettings.json file in our project which looks like this:

{
  "ConnectionStrings": {
    "AzureWebJobsStorage": "..."
  }
}

Also, in the file's properties we need to set Copy to Output Directory = Copy always, so it will always be copied to the output directory together with the exe file. After that the error should disappear and the web job should start successfully.
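A quick way to catch a missing Copy to Output Directory setting is to check the build output before deploying. A minimal Python sketch, assuming the output folder of the web job is known; `has_webjobs_storage` is a hypothetical helper. It checks only appsettings.json and doesn't look at other configuration sources the host may use:

```python
import json
from pathlib import Path


def has_webjobs_storage(output_dir, name="AzureWebJobsStorage"):
    """Return True if output_dir contains appsettings.json with ConnectionStrings:<name> set."""
    settings_file = Path(output_dir) / "appsettings.json"
    if not settings_file.is_file():
        return False  # the file was not copied next to the exe
    # utf-8-sig tolerates the BOM that Visual Studio may add
    settings = json.loads(settings_file.read_text(encoding="utf-8-sig"))
    connection_strings = settings.get("ConnectionStrings") or {}
    return bool(connection_strings.get(name))
```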

Friday, October 1, 2021

Use self-signed SSL certificate for web API hosted in Azure App service

Suppose that we develop a web API (e.g. https://api.example.com) and want to use a self-signed certificate for its domain name. Since it is a web API, we usually don't worry much about errors or warnings which a browser may show when you open its url there. At the same time we don't want to compromise security and thus want to allow only our SSL certificate with a known hash/thumbprint.

First of all we need to create a self-signed certificate for the domain name which will be used for the web API (it should contain CN=api.example.com, otherwise it won't be possible to bind it to the custom domain name in Azure App service). Also the certificate should be created with an exportable private key (pfx) so we can use it in Azure. It can be done with the following PowerShell:

$start = [System.DateTime]::Now.AddDays(-1)
$end = $start.AddYears(2)
New-SelfSignedCertificate `
    -FriendlyName "api.example.com" `
    -KeyFriendlyName "api.example.com" `
    -KeyAlgorithm "RSA" `
    -DnsName "api.example.com" `
    -NotBefore $start `
    -NotAfter $end `
    -KeyUsage CertSign, CRLSign, DataEncipherment, DigitalSignature, NonRepudiation `
    -KeyUsageProperty All `
    -KeyLength 2048 `
    -CertStoreLocation cert:\LocalMachine\My `
    -KeyExportPolicy Exportable
$cert = Get-ChildItem -Path Cert:\LocalMachine\my | where-object { $_.Subject -eq "CN=api.example.com" }
$password = Read-Host -Prompt "Enter Password to protect private key" -AsSecureString
Export-PfxCertificate -Cert $cert -Password $password -FilePath api.example.com.pfx

Here we first create the certificate itself and then export its private key to the file system.

The next step is to add the custom domain name api.example.com to the Azure App service where we will host our API. First of all we need to ensure that the current pricing tier supports the "Custom domains / SSL" feature. Currently the minimal pricing tier with this option is B1 (it is not free).

Then we go to App service > Custom domains > Add custom domain and specify the desired domain name for our web API.

Before Azure allows us to use the custom domain name, we need to prove hostname ownership by adding TXT and CNAME DNS records for the specified domain name in the hosting provider's control panel (here are detailed instructions for the whole process: Map an existing custom DNS name to Azure App Service).

The last part is related to the client which calls our web API. Since the web API uses a self-signed certificate, an attempt to call it from C# using HttpClient will fail. We need to configure the client to allow our SSL certificate but at the same time not allow other self-signed SSL certificates. It can be done with the following C# code:

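using System.Net;
using System.Net.Security;
using System.Security.Cryptography.X509Certificates;

// thumbprint of the self-signed SSL certificate
private const string CERT_THUMBPRINT = "...";

// register the callback once, e.g. at application startup
ServicePointManager.ServerCertificateValidationCallback = validateServerCertificate;
...

private static bool validateServerCertificate(
    object sender,
    X509Certificate cert,
    X509Chain chain,
    SslPolicyErrors sslPolicyErrors)
{
    if (sslPolicyErrors == SslPolicyErrors.None)
    {
        // Good certificate.
        return true;
    }
    // otherwise accept only our self-signed certificate (case-insensitive thumbprint comparison)
    return cert != null && string.Compare(cert.GetCertHashString(), CERT_THUMBPRINT, true) == 0;
}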

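In this code we instruct ServicePointManager to allow only our self-signed certificate when an error occurs while the SSL certificate is checked during connection to the remote host. Thus it won't allow connections to hosts which use other self-signed SSL certificates. Note that ServicePointManager affects HttpClient on .NET Framework; on .NET Core/.NET 5 the equivalent callback is set via HttpClientHandler.ServerCertificateCustomValidationCallback.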
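The same pinning idea can be illustrated outside .NET. A Python sketch, assuming the pinned value is the SHA-1 thumbprint shown for the certificate in Windows (which is what GetCertHashString() returns); `fetch_server_cert`, `thumbprint` and `is_pinned` are hypothetical helpers. Unlike the C# callback, it doesn't first accept certificates that pass normal validation; it only checks the pin:

```python
import hashlib
import socket
import ssl


def thumbprint(der_cert):
    """SHA-1 of the DER-encoded certificate as uppercase hex (the Windows thumbprint)."""
    return hashlib.sha1(der_cert).hexdigest().upper()


def is_pinned(der_cert, expected_thumbprint):
    """Case-insensitive comparison, like string.Compare(..., true) in the C# callback."""
    return thumbprint(der_cert) == expected_thumbprint.replace(" ", "").upper()


def fetch_server_cert(host, port=443):
    """Fetch the server certificate without chain validation so a self-signed one can be checked."""
    context = ssl.create_default_context()
    context.check_hostname = False
    context.verify_mode = ssl.CERT_NONE  # validation is replaced by the thumbprint check
    with socket.create_connection((host, port), timeout=10) as sock:
        with context.wrap_socket(sock, server_hostname=host) as tls:
            return tls.getpeercert(binary_form=True)
```

Usage: `is_pinned(fetch_server_cert("api.example.com"), "<thumbprint>")` returns True only for the pinned certificate.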

Update 2021-10-15: see also post Convert self-signed SSL certificate's private key from .pfx to .key and .crt which describes how to export private key of self-signed certificate from pfx to key/crt.

Thursday, September 30, 2021

Speaking on online IT community event

Today I spoke at an online IT community event about development and debugging of Azure functions: https://tulaitcommunity.ru. At this event I wanted to show how to efficiently develop Azure functions and use them in Sharepoint Online. Development of Azure functions for Sharepoint Online requires skills and experience with many tools like Visual Studio, Postman, ngrok, PnP PowerShell and SPO browser; all of them were used in my demo. The presentation is available on Slideshare here: https://www.slideshare.net/sadomovalex/azure-sharepoint-online. The recording of the online meeting is available on YouTube (in Russian).

Hope that it was interesting :)

Wednesday, September 1, 2021

List and delete Azure web jobs using KUDU Web Jobs REST API

Some time ago I wrote how to remove Azure web jobs using the AzureRM PowerShell module: How to remove Azure web job via Azure RM PowerShell. However, since then AzureRM has been discontinued, and MS recommends switching to the new Az module instead. The problem is that if you follow the AzureRM migration guide, the same code won't work anymore. The main problem is that the new Get-AzResource cmdlet doesn't return resources with type microsoft.web/sites/triggeredwebjobs (although it still returns other Azure resources like App services, Azure functions, Storage accounts, KeyVaults, SSL certificates). It means that you also can't use the Remove-AzResource cmdlet, because there won't be a resource name which you may pass to it. For now I submitted an issue to the Azure PowerShell github about that: Get-AzResource doesn't return web jobs. But let's see how we may work around it until MS fixes it.

There is another open issue in the Azure PowerShell github, ARM :: WebApp Web Job Cmdlets, which reports the lack of cmdlets for managing web jobs. It was created in 2015 and is still open. One suggestion there is to use the az cli tool. However, it is not always possible (e.g. if you run your PowerShell in a runbook or can't add this dependency to your script).

Fortunately there is the KUDU Web Jobs REST API which may be utilized for this purpose. In order to list available web jobs we may use the following code:

$token = Get-AzAccessToken
$headers = @{ "Authorization" = "Bearer $($token.Token)" }
$userAgent = "powershell/1.0"
Invoke-RestMethod -Uri "https://{appServiceName}.scm.azurewebsites.net/api/triggeredwebjobs" -Headers $headers -UserAgent $userAgent -Method GET

It will return the list of all existing web jobs (triggered ones in our example) in the specific App service. In order to delete a web job we need to send an HTTP DELETE request to the web job's endpoint:

$token = Get-AzAccessToken
$headers = @{ "Authorization" = "Bearer $($token.Token)" }
$userAgent = "powershell/1.0"
Invoke-RestMethod -Uri "https://{appServiceName}.scm.azurewebsites.net/api/triggeredwebjobs/{webjobName}" -Headers $headers -UserAgent $userAgent -Method DELETE

There are other commands as well (like run job, list running history and others). You may check them in REST API documentation using link posted above.
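When PowerShell isn't available, the same two calls can be made with Python's standard library. A sketch, assuming `token` is an Azure AD access token such as the Token value returned by Get-AzAccessToken, and that the App service name is filled in; `kudu_request`, `list_webjobs` and `delete_webjob` are hypothetical helpers, and the User-Agent value is arbitrary like the one in the PowerShell version:

```python
import json
import urllib.parse
import urllib.request


def kudu_request(app_service, token, job=None, method="GET"):
    """Build a request to the Kudu triggered web jobs endpoint (the list endpoint if job is None)."""
    url = f"https://{app_service}.scm.azurewebsites.net/api/triggeredwebjobs"
    if job:
        url += "/" + urllib.parse.quote(job)
    return urllib.request.Request(url, method=method, headers={
        "Authorization": f"Bearer {token}",
        "User-Agent": "python/1.0",
    })


def list_webjobs(app_service, token):
    """GET the list of triggered web jobs as parsed JSON."""
    with urllib.request.urlopen(kudu_request(app_service, token)) as resp:
        return json.load(resp)


def delete_webjob(app_service, token, job):
    """Send HTTP DELETE for one web job and return the HTTP status code."""
    with urllib.request.urlopen(kudu_request(app_service, token, job, "DELETE")) as resp:
        return resp.status
```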

Thursday, August 26, 2021

Camlex.Client 5.0 released: support for .NET 5.0 and .NETStandard 2.0 target frameworks

Good news for Sharepoint developers who use the Camlex library: today a new version, Camlex.Client 5.0.0, has been released. In this version native support for .NET 5.0 and .NET Standard 2.0 target frameworks was added. .NET Framework 4.5 is also still there for backward compatibility.

With this new version you may use Camlex.Client in your .NET 5.0 or .NET Core 3.1/2.1 projects natively, i.e. there won't be a warning anymore that Camlex is referenced using .NET Framework compatibility mode. Each Camlex.Client nuget package now has assemblies for 3 target frameworks:

- .NET Framework 4.5
- .NET Standard 2.0
- .NET 5.0

which should cover most development needs. The following table shows which Camlex.Client assembly an application will use depending on its target framework:

| App's target framework | Camlex.Client target framework |
|---|---|
| .NET Framework 4.5 | .NET Framework 4.5 |
| .NET Framework 4.6.1 | .NET Framework 4.5 |
| .NET Framework 4.7.2 | .NET Framework 4.5 |
| .NET Core 2.1 | .NET Standard 2.0 |
| .NET Core 3.1 | .NET Standard 2.0 |
| .NET 5.0 | .NET 5.0 |
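The selection in the table follows NuGet's rule of picking the nearest compatible framework, preferring assets of the app's own framework family over .NET Standard. A simplified Python model of that rule for just this package (not NuGet's actual algorithm, which handles many more frameworks and profiles); `camlex_client_asset` is a hypothetical helper that reproduces the rows above:

```python
def _version(text):
    """Turn '4.7.2' into (4, 7, 2) for tuple comparison."""
    return tuple(int(part) for part in text.split("."))


def camlex_client_asset(app_framework):
    """Return the Camlex.Client target framework an app with app_framework would use.

    Only the three frameworks shipped in the package are considered.
    """
    name = app_framework.lower().replace(" ", "")
    if name.startswith(".netframework"):
        if _version(name[len(".netframework"):]) >= (4, 5):
            return ".NET Framework 4.5"  # same-family asset wins over .NET Standard
    elif name.startswith(".netcore"):
        if _version(name[len(".netcore"):]) >= (2, 0):
            return ".NET Standard 2.0"
    elif name.startswith(".net"):
        # .NET 5.0 and later
        if _version(name[len(".net"):]) >= (5, 0):
            return ".NET 5.0"
    raise ValueError(f"no compatible Camlex.Client assembly for {app_framework}")
```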

All Camlex.Client nuget packages have been updated with this change:

- Camlex.Client.dll for SP Online
- Camlex.Client.2013 for SP2013
- Camlex.Client.2016 for SP2016
- Camlex.Client.2019 for SP2019

Thursday, August 19, 2021

Method not found error in UnifiedGroupsUtility.ListUnifiedGroups() when use PnP.Framework 1.6.0 with newer version of Microsoft.Graph

Today I faced an interesting problem: in our .Net Framework 4.7.2 project we use PnP.Framework 1.6.0 (the latest stable release currently), which was built with Microsoft.Graph 3.33.0. Then I updated Microsoft.Graph to the latest 4.3.0 version, and after that the UnifiedGroupsUtility.ListUnifiedGroups() method from PnP.Framework started to throw the following error:

Method not found: 'System.Threading.Tasks.Task`1<Microsoft.Graph.IGraphServiceGroupsCollectionPage> Microsoft.Graph.IGraphServiceGroupsCollectionRequest.GetAsync()'.

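When I analyzed the error I found the following: in Microsoft.Graph 3.33.0 the IGraphServiceGroupsCollectionRequest interface has a parameterless GetAsync() method returning Task<IGraphServiceGroupsCollectionPage> (the method named in the error message), and PnP.Framework 1.6.0 was compiled against it.

In Microsoft.Graph 4.3.0 the signature of this method has been changed: cancellationToken became an optional parameter, so the parameterless overload no longer exists in the compiled assembly.

As a result we got the Method not found error at runtime. Interestingly, app.config had a binding redirect for the Microsoft.Graph assembly, but it didn't help in this case (a binding redirect only changes which assembly version is loaded; it can't bring back a method signature which no longer exists there):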

<dependentAssembly>
  <assemblyIdentity name="Microsoft.Graph" publicKeyToken="31bf3856ad364e35" culture="neutral" />
  <bindingRedirect oldVersion="0.0.0.0-4.3.0.0" newVersion="4.3.0.0" />
</dependentAssembly>

It is also interesting that the same code works properly in a project which targets .NET Core 3.1.

For the .Net Framework project the workaround was to copy the code of UnifiedGroupsUtility.ListUnifiedGroups() from PnP.Framework to our project (fortunately it is open source) and then call this copied version instead of the one from PnP.Framework. When a new version of PnP.Framework which uses a newer version of Microsoft.Graph is released, we may revert this change.

Thursday, July 22, 2021

Use Azure function as remote event receiver for Sharepoint Online list and debug it locally with ngrok

If you worked with Sharepoint on-prem, you probably know what event receivers are: custom handlers which you may subscribe to different types of events. They are available at different levels (web, list, etc). In this article we will talk about list event receivers.

In Sharepoint Online we can't use old event receivers because they have to be installed as farm solutions, which are not available in SPO. Instead we have to use remote event receivers. The concept is very similar, but instead of class and assembly names we provide an end point url to which SPO will send an HTTP POST request when the event happens.

Let's see how it works in practice. For the event receiver end point I will use an Azure function running locally. For debugging it I will use ngrok tunneling (btw ngrok is a great service which makes developers' life much easier; if you are not familiar with it yet, I strongly suggest trying it :) ). But let's go step by step.

First of all we need to implement our Azure function:

[FunctionName("ItemUpdated")]
public static async Task<HttpResponseMessage> Run([HttpTrigger(AuthorizationLevel.Anonymous, "post", Route = null)]HttpRequestMessage req, TraceWriter log)
{
    log.Info("Start ItemUpdated.Run");
    // read the SOAP payload sent by Sharepoint Online
    var request = await req.Content.ReadAsStringAsync();
    return req.CreateResponse(HttpStatusCode.OK);
}

It doesn't do anything except read the body payload as a string; we will examine it later. Then we run it locally; by default it will use the http://localhost:7071/api/ItemUpdated url.

Next step is to create ngrok tunnel so we will get public https end point which can be used by SPO. It is done by the following command:

ngrok http -host-header=localhost 7071

After running this command you should see ngrok's session status in the console, including the forwarding urls.

Now everything is ready for attaching the remote event receiver to our list. It can be done with the Add-PnPEventReceiver cmdlet from PnP.Powershell. Note however that beforehand it is important to connect to the target site with Connect-PnPOnline using the UseWebLogin parameter:

Connect-PnPOnline -Url https://{tenant}.sharepoint.com/sites/{url} -UseWebLogin

Without UseWebLogin remote event receiver won't be triggered. Here is the issue on github which explains why: RemoteEventReceivers are not fired when added via PnP.Powershell.

When ngrok is running, we need to copy the forwarding url: the one which uses https and looks like https://{randomId}.ngrok.io (shown in ngrok's console output). We will use this url when we attach the event receiver to the target list:

Connect-PnPOnline -Url https://mytenant.sharepoint.com/sites/Test -UseWebLogin

$list = Get-PnPList TestList

Add-PnPEventReceiver -List $list.Id -Name TestEventReceiver -Url https://{...}.ngrok.io/api/ItemUpdated -EventReceiverType ItemUpdated -Synchronization Synchronous

Here I attached a remote event receiver to TestList on the Test site and subscribed it to the ItemUpdated event. For the end point I specified the url of our Azure function using the ngrok host. Also I created it as a synchronous event receiver, so it will be triggered immediately when an item gets updated in the target list. If everything went Ok, you should see your event receiver attached to the target list in Sharepoint Online Client Browser.

Note that ReceiverUrl property will contain ngrok end point url which we passed to Add-PnPEventReceiver.

Now all pieces are set and we may test our remote event receiver: go to TestList and try to edit a list item there. After saving changes the event receiver should be triggered immediately. If you check the body payload, you will see that it contains information about the properties which have been changed and the id of the list item which we just modified:

<s:Envelope xmlns:s="http://schemas.xmlsoap.org/soap/envelope/">
  <s:Body>
    <ProcessEvent xmlns="http://schemas.microsoft.com/sharepoint/remoteapp/">
      <properties xmlns:i="http://www.w3.org/2001/XMLSchema-instance">
        <AppEventProperties i:nil="true"/>
        <ContextToken/>
        <CorrelationId>c462dd9f-604a-2000-ea96-f4bacc80aa84</CorrelationId>
        <CultureLCID>1033</CultureLCID>
        <EntityInstanceEventProperties i:nil="true"/>
        <ErrorCode/>
        <ErrorMessage/>
        <EventType>ItemUpdated</EventType>
        <ItemEventProperties>
          <AfterProperties xmlns:a="http://schemas.microsoft.com/2003/10/Serialization/Arrays">
            <a:KeyValueOfstringanyType>
              <a:Key>TimesInUTC</a:Key>
              <a:Value i:type="b:string" xmlns:b="http://www.w3.org/2001/XMLSchema">TRUE</a:Value>
            </a:KeyValueOfstringanyType>
            <a:KeyValueOfstringanyType>
              <a:Key>Title</a:Key>
              <a:Value i:type="b:string" xmlns:b="http://www.w3.org/2001/XMLSchema">item01</a:Value>
            </a:KeyValueOfstringanyType>
            <a:KeyValueOfstringanyType>
              <a:Key>ContentTypeId</a:Key>
              <a:Value i:type="b:string" xmlns:b="http://www.w3.org/2001/XMLSchema">...</a:Value>
            </a:KeyValueOfstringanyType>
          </AfterProperties>
          <AfterUrl i:nil="true"/>
          <BeforeProperties xmlns:a="http://schemas.microsoft.com/2003/10/Serialization/Arrays"/>
          <BeforeUrl/>
          <CurrentUserId>6</CurrentUserId>
          <ExternalNotificationMessage i:nil="true"/>
          <IsBackgroundSave>false</IsBackgroundSave>
          <ListId>96c8d1c1-de22-47bf-9f70-aeeefa349856</ListId>
          <ListItemId>1</ListItemId>
          <ListTitle>TestList</ListTitle>
          <UserDisplayName>...</UserDisplayName>
          <UserLoginName>...</UserLoginName>
          <Versionless>false</Versionless>
          <WebUrl>https://mytenant.sharepoint.com/sites/Test</WebUrl>
        </ItemEventProperties>
        <ListEventProperties i:nil="true"/>
        <SecurityEventProperties i:nil="true"/>
        <UICultureLCID>1033</UICultureLCID>
        <WebEventProperties i:nil="true"/>
      </properties>
    </ProcessEvent>
  </s:Body>
</s:Envelope>
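To do something useful with this payload, the event type, list item id and changed properties have to be pulled out of the SOAP envelope. A Python sketch of that parsing, assuming the namespaces shown in the payload above; `parse_item_event` is a hypothetical helper (in the C# function the same could be done with the .NET XML APIs):

```python
import xml.etree.ElementTree as ET

NS = {
    "s": "http://schemas.xmlsoap.org/soap/envelope/",
    "rer": "http://schemas.microsoft.com/sharepoint/remoteapp/",
    "a": "http://schemas.microsoft.com/2003/10/Serialization/Arrays",
}


def parse_item_event(payload):
    """Extract event type, list id, item id and AfterProperties from a ProcessEvent payload."""
    root = ET.fromstring(payload)
    props = root.find("s:Body/rer:ProcessEvent/rer:properties", NS)
    item = props.find("rer:ItemEventProperties", NS)
    # AfterProperties is a list of Key/Value pairs in the Arrays namespace
    after = {
        kv.findtext("a:Key", namespaces=NS): kv.findtext("a:Value", namespaces=NS)
        for kv in item.findall("rer:AfterProperties/a:KeyValueOfstringanyType", NS)
    }
    return {
        "event_type": props.findtext("rer:EventType", namespaces=NS),
        "list_id": item.findtext("rer:ListId", namespaces=NS),
        "item_id": int(item.findtext("rer:ListItemId", namespaces=NS)),
        "after": after,
    }
```

For the payload above it would return event_type "ItemUpdated", item_id 1 and an "after" dictionary containing Title = "item01".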

This technique lets you use an Azure function as a remote event receiver and debug it locally. I hope it will help someone.

Update 2021-11-22: see also a strange problem with remote event receivers in SPO sites: Strange problem with remote event receivers not firing in Sharepoint Online sites which urls/titles ends with digits.

Monday, July 19, 2021

How to edit properties of SPFx web part using PnP.Framework

In my previous post I showed how to add an SPFx web part to a modern page using PnP.Framework (see How to add SPFx web part on modern page using PnP.Framework). In this post I will continue with this topic and show how to edit the properties of an SPFx web part using PnP.Framework.

To edit a web part property we need to know 3 things:

- web part id

- property name

- property value

You can get the web part id and the property name from the manifest of your web part. With these values you can set an SPFx web part property using the following code:

using Newtonsoft.Json;
using Newtonsoft.Json.Linq;

var ctx = ...;
var page = ctx.Web.LoadClientSidePage(pageName);
IPageWebPart webpart = null;
// find the SPFx web part by its id
foreach (var control in page.Controls)
{
    if (control is IPageWebPart && (control as IPageWebPart).WebPartId == webPartId)
    {
        webpart = control as IPageWebPart;
        break;
    }
}
if (webpart != null)
{
    // update a single property in the web part's JSON properties
    var propertiesObj = JsonConvert.DeserializeObject<JObject>(webpart.PropertiesJson);
    propertiesObj[propertyName] = propertyValue;
    webpart.PropertiesJson = propertiesObj.ToString();
    page.Save();
    page.Publish();
}

First we get an instance of the modern page. Then we find the needed SPFx web part on the page by its web part id. For the found web part we deserialize its PropertiesJson property to a JObject and set the property to the passed value. After that we serialize it back to a string and store it in the webpart.PropertiesJson property. Finally we save and publish the parent page, after which our SPFx web part has the new property value.
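The same deserialize, modify, serialize round trip can be illustrated in TypeScript. This is only a sketch: PageWebPartLike is a hypothetical stand-in for a page control (the real PnP.Framework types are C#), and saving and publishing the page are left out.

```typescript
// Hypothetical shape of a page control: the web part id plus its serialized properties.
interface PageWebPartLike {
  webPartId: string;
  propertiesJson: string;
}

// Finds the first web part with the given id and sets one top-level property in its JSON.
// Returns true when a matching web part was found and updated.
function setWebPartProperty(
  controls: PageWebPartLike[],
  webPartId: string,
  propertyName: string,
  propertyValue: unknown
): boolean {
  const webPart = controls.find((c) => c.webPartId === webPartId);
  if (!webPart) {
    return false;
  }
  // Deserialize, change a single property and serialize back; other properties are kept.
  const props = JSON.parse(webPart.propertiesJson) as Record<string, unknown>;
  props[propertyName] = propertyValue;
  webPart.propertiesJson = JSON.stringify(props);
  return true;
}
```

Like the C# code, the id comparison here is an exact (case-sensitive) match.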

Wednesday, July 14, 2021

How to add SPFx web part on modern page using PnP.Framework

If you migrated from OfficeDevPnP (SharePointPnPCoreOnline nuget package) to PnP.Framework, you will need to rewrite the code which adds SPFx web parts to modern pages. Old code which used OfficeDevPnP looked like this:

var page = ctx.Web.LoadClientSidePage(pageName);
var groupInfoComponent = new ClientSideComponent();
groupInfoComponent.Id = webPartId;
groupInfoComponent.Manifest = webPartManifest;
page.AddSection(CanvasSectionTemplate.OneColumn, 1);
var groupInfoWP = new ClientSideWebPart(groupInfoComponent);
page.AddControl(groupInfoWP, page.Sections[page.Sections.Count - 1].Columns[0]);
page.Save();
page.Publish();

But it won't compile with PnP.Framework, because ClientSideWebPart became IPageWebPart. Also ClientSideComponent means something different and doesn't have the same properties. In order to add an SPFx web part to a modern page with PnP.Framework, the following code can be used:

var page = ctx.Web.LoadClientSidePage(pageName);
var groupInfoComponent = page.AvailablePageComponents().FirstOrDefault(c => string.Compare(c.Id, webPartId, true) == 0);
var groupInfoWP = page.NewWebPart(groupInfoComponent);
page.AddSection(CanvasSectionTemplate.OneColumn, 1);
page.AddControl(groupInfoWP, page.Sections[page.Sections.Count - 1].Columns[0]);
page.Save();
page.Publish();

Here we first get the web part component from page.AvailablePageComponents() and then create a new web part using page.NewWebPart(). After that we add the web part to the page using page.AddControl() as before. I hope it will help someone.
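The lookup above compares ids case-insensitively (string.Compare with ignoreCase = true). A small TypeScript sketch of the same lookup, with a hypothetical PageComponentLike type standing in for the real component class; it returns undefined when nothing matches instead of silently passing a missing component on:

```typescript
// Hypothetical minimal shape of an entry returned by AvailablePageComponents().
interface PageComponentLike {
  id: string;
  name: string;
}

// Case-insensitive id match, the counterpart of string.Compare(a, b, true) == 0 for GUID strings.
function findComponent(
  components: PageComponentLike[],
  webPartId: string
): PageComponentLike | undefined {
  const wanted = webPartId.toLowerCase();
  return components.find((c) => c.id.toLowerCase() === wanted);
}
```

If the lookup returns nothing, the web part is most probably not available on the site, so it is worth checking for that before calling page.NewWebPart().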

Monday, July 12, 2021

Use assemblies aliases when perform migration from SharePointPnPCoreOnline to PnP.Framework

Some time ago the PnP team announced that the SharePointPnPCoreOnline nuget package was retired and that PnP.Framework should be used instead. The latest available version of SharePointPnPCoreOnline is 3.28.2012 and it won't be developed further. Following that recommendation we migrated from SharePointPnPCoreOnline to PnP.Framework. During the migration we ran into several interesting issues, and I'm going to write a series of posts about them.

One of the issues was that the class SharePointPnP.IdentityModel.Extensions.S2S.Protocols.OAuth2.OAuth2AccessTokenResponse was defined in 2 different assemblies in the same namespace:

- SharePointPnP.IdentityModel.Extensions.dll

- PnP.Framework.dll

The compiler couldn't resolve this ambiguity and showed an error:

Since both the class name and the namespace are the same, in order to resolve this issue we need to use a rarely used technique called extern aliases. First, in VS, we need to specify an assembly alias in the referenced assembly's properties window. By default all referenced assemblies have the "global" alias, so we need to change it to a custom one:

Then, at the top of the .cs file which uses the ambiguous class name, we need to declare our alias and then add a "using" directive with this alias:

extern alias SharePointPnPIdentityModelExtensions;
...
using SharePointPnPIdentityModelExtensions::SharePointPnP.IdentityModel.Extensions.S2S.Tokens;

After these steps the compilation error was gone and the solution was built successfully.

Thursday, July 8, 2021

Get Sql Server database file's max size limit with db_reader permissions

Some time ago I wrote how to get the current db size in Sql Server with limited db_reader permissions (see Calculate Sql Server database size having only db_reader permissions on target database). In this post I will show how you can also get the max size limit of a db file having only db_reader permissions.

As you probably know, in Sql Server we may set a limit on the db file size (and on the transaction log file size) using the following commands (in the example below we limit both files to 100MB):

ALTER DATABASE {db}
MODIFY FILE (NAME = {filename}, MAXSIZE = 100MB);
GO
ALTER DATABASE {db}
MODIFY FILE (NAME = [{filename}.Log], MAXSIZE = 100MB);
GO

As a result, if we check Database properties > Files, we will see these limits on both files:

In order to get these limits programmatically with db_reader permissions, we can use another system stored procedure, sp_helpdb, and pass the database name as a parameter:

exec sp_helpdb N'{databaseName}'

This stored procedure returns 2 result sets. The 2nd result set contains one row per database file, and its maxsize field contains the max size limit of that file. Here is the code which reads the maxsize field of the first row (the primary data file) from the result of sp_helpdb:

using (var connection = new SqlConnection(connectionString))
{
    connection.Open();
    using (var cmd = connection.CreateCommand())
    {
        cmd.CommandText = $"exec sp_helpdb N'{connection.Database}'";
        using (var reader = cmd.ExecuteReader())
        {
            // move to the 2nd result set (one row per database file)
            if (reader.NextResult())
            {
                if (reader.Read())
                {
                    return reader["maxsize"] as string;
                }
            }
        }
    }
}
// no file information was returned
return null;

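For the database used in the example above it returns the limit of the data file as a string. Note that sp_helpdb reports sizes in KB, so the 100MB limit comes back as "102400 KB" (and as "Unlimited" when no limit is set). Hope that it will help someone.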
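Since maxsize comes back as text, it usually needs to be converted before comparing it with the current size. A minimal TypeScript sketch, assuming the value is either "Unlimited" or a number followed by " KB" (the format sp_helpdb uses for its size columns as far as I know; adjust the regex if your server returns something else). The function name is hypothetical:

```typescript
// Converts the maxsize text reported by sp_helpdb into megabytes.
// Returns null for files without a limit and throws on an unexpected format.
function maxSizeToMb(maxsize: string): number | null {
  const value = maxsize.trim();
  if (value.toLowerCase() === "unlimited") {
    return null;
  }
  const m = /^(\d+)\s*KB$/i.exec(value);
  if (!m) {
    throw new Error(`Unexpected maxsize format: ${maxsize}`);
  }
  return Number(m[1]) / 1024; // KB -> MB
}
```

E.g. maxSizeToMb("102400 KB") gives 100.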

Tuesday, July 6, 2021

How to get specific subfolder from source control repository in Azure DevOps pipeline

Often in an Azure DevOps pipeline we only need a specific subfolder instead of the whole repository branch. If we check the "Get sources" task, we will notice that there is no way to choose a subfolder: we may only select the project, repository and branch, but there is no field for selecting a specific folder:

However, it is not hard to achieve this. After "Get sources" we need to add a "Delete files from" task:

and in this task list all subfolders which are not needed:

As a result, all subfolders listed there (in the example above these are subfolder1, subfolder2 and subfolder3) will be deleted from the root folder with the copied sources before the pipeline continues. This trick allows you to get only a specific subfolder from source control in an Azure DevOps pipeline.

Thursday, July 1, 2021

Sharepoint MVP 2021

Today I got an email from MS saying that my MVP status has been renewed. This is my 11th award, and I'm very excited and proud to be recognized for my community contribution over the last year. This year was challenging and probably the most different from previous years. The pandemic changed our lives: we had to adapt our daily routines to the new normal. Many people moved online and started working remotely. On the other hand, it put new requirements on the digital tools which help people do their work in these difficult times. Applications like MS Teams, Zoom, etc. started to play an important role in our lives, and the growing number of users of these apps confirms that. Nowadays in our work we pay more attention to scalability and performance, which allows us to handle massive user growth. All of that was difficult and challenging, but at the same time very interesting from a professional point of view. I got familiar with many new technologies from the O365, Azure and Sharepoint Online world which help with these goals, and tried to share my experience with other developers in my technical blog, forums, webinars, etc. That's why I'm especially glad that my contribution was recognized by MS for this challenging year. Thank you, and let's continue our discovery of the IT world.

Monday, June 28, 2021

Use SQL LIKE operator in CosmosDB

One known pain point for developers who work with Azure table storage is the lack of the SQL LIKE operator, which allows queries with StartsWith, EndsWith or Contains conditions. There is another NoSQL database available in Azure: CosmosDB. It is not free (Table storage isn't free either, but it is very cheap), but, depending on the API you select, it supports the LIKE operator.

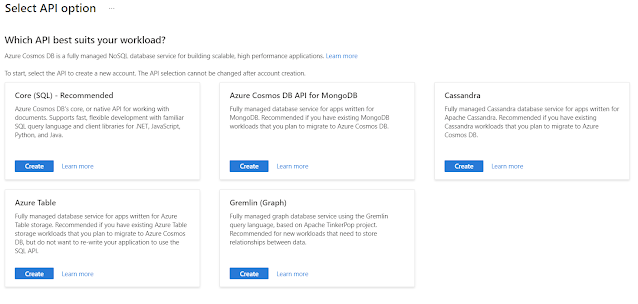

The first thing you should do when creating a new CosmosDB instance is to select the underlying API:

At the moment of writing this post the following APIs were available:

- Core (SQL)

- MongoDB

- Cassandra

- Azure table

- Gremlin

If you create CosmosDB with the Azure table API, it will look the same as native table storage and will have the same 6 operators available for queries: <, <=, >, >=, =, <>. I.e. there is still no LIKE operator:

But if you create it with the Core (SQL) API, it will look different (it will work with "documents" instead of tables and rows):

and, more importantly, it will allow you to use the SQL-like API and queries from the Microsoft.Azure.Cosmos nuget package. E.g. if we have a Groups container, we may fetch the groups which have some user as a member using the following query (for simplicity we assume here that members are stored as a JSON serialized string):

string userName = ...;
// userName is passed as a query parameter instead of being concatenated into the SQL text
var queryDefinition = new QueryDefinition("SELECT * FROM c WHERE c.members LIKE @pattern")
    .WithParameter("@pattern", $"%{userName}%");
var queryResultSetIterator = this.container.GetItemQueryIterator<Group>(queryDefinition);
var items = new List<Group>();
// results are returned page by page
while (queryResultSetIterator.HasMoreResults)
{
    var currentResultSet = await queryResultSetIterator.ReadNextAsync();
    foreach (var item in currentResultSet)
    {
        items.Add(item);
    }
}
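To illustrate which LIKE patterns correspond to StartsWith, EndsWith and Contains, here is a small TypeScript sketch that emulates LIKE matching with a regular expression ('%' = any sequence of characters, '_' = any single character). It is a simplification for illustration only, not how CosmosDB evaluates LIKE: ESCAPE clauses and bracket ranges are not handled, and matching here is case-sensitive. The function names are hypothetical.

```typescript
// Builds an anchored RegExp equivalent to a simple LIKE pattern.
function likeToRegExp(pattern: string): RegExp {
  let source = "";
  for (let i = 0; i < pattern.length; i++) {
    const ch = pattern[i];
    if (ch === "%") {
      source += "[\\s\\S]*"; // any sequence, including an empty one
    } else if (ch === "_") {
      source += "[\\s\\S]"; // exactly one character
    } else {
      // Escape regex metacharacters in the literal parts of the pattern.
      source += ch.replace(/[.*+?^${}()|[\]\\]/g, "\\$&");
    }
  }
  return new RegExp(`^${source}$`);
}

function like(value: string, pattern: string): boolean {
  return likeToRegExp(pattern).test(value);
}

// "jo%" = StartsWith, "%hn" = EndsWith, "%bob%" = Contains
console.log(like("john", "jo%"), like("john", "%hn"), like('["alice","bob"]', "%bob%"));
// Caveat of a serialized member list: "%bob%" also matches "bobby", '%"bob"%' does not.
console.log(like('["bobby"]', "%bob%"), like('["bobby"]', '%"bob"%'));
```

The last line shows why, with members stored as one serialized string, it can make sense to include the JSON quotes in the pattern.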

So if you need a NoSQL database with support for the LIKE operator, consider using CosmosDB with the Core (SQL) API.

Thursday, June 24, 2021

Compare performance of Azure CosmosDB (with Azure Table API) vs Table storage

As you probably know, Table storage is the basic NoSQL db option available in Azure. It is very cheap and is used internally by many Azure components (e.g. Azure functions or bots). On the other hand, CosmosDB is a powerful NoSQL db engine available in Azure which supports several different APIs. You need to select the API when creating a CosmosDB instance, and it can't be changed after that:

- Core (SQL)

- MongoDB

- Cassandra

- Azure Table

- Gremlin

I.e. if you have an existing application which already uses Table storage, you may create CosmosDB with the Azure Table API and switch to it without rewriting application code (at least in theory: e.g. if your app targets .Net Framework, you will most probably need to retarget it to .Net Standard or .Net Core, but that is a topic for a different post).

In our product we use Table storage, and we decided to compare the performance of CosmosDB (with the Azure table API) and native Table storage. The following test setup was used:

- table with about 100K rows

- query against the system PartitionKey field: PartitionKey eq {guid} (this query is fast by itself because PartitionKey is an indexed field)

- each query returned about 300 of the 100K rows

- execution time was measured

The same test was run 10 times in order to get the average execution time, for CosmosDB and Table storage separately. Here are the results:

| Test run# | CosmosDB (msec) | Table storage (msec) |
|---|---|---|
| 1 | 3639 | 2825 |
| 2 | 3249 | 2008 |
| 3 | 3626 | 1613 |
| 4 | 3016 | 1813 |
| 5 | 2764 | 1942 |
| 6 | 2840 | 1713 |
| 7 | 4704 | 1776 |
| 8 | 2975 | 2036 |
| 9 | 3353 | 1808 |
| 10 | 2833 | 1699 |
| Avg (msec) | 3299.9 | 1923.3 |

I.e. the average execution time for native Table storage (1923.3 msec) was lower than for CosmosDB with the Azure Table API (3299.9 msec): Table storage was roughly 1.7 times faster in this test.
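The averages in the table can be re-checked with a few lines of TypeScript. The sketch also computes the median, which is less sensitive to single slow runs such as run 7 for CosmosDB (4704 msec):

```typescript
// Execution times in msec, copied from the table above.
const cosmosDbMs = [3639, 3249, 3626, 3016, 2764, 2840, 4704, 2975, 3353, 2833];
const tableStorageMs = [2825, 2008, 1613, 1813, 1942, 1713, 1776, 2036, 1808, 1699];

// Arithmetic mean.
function mean(xs: number[]): number {
  return xs.reduce((sum, x) => sum + x, 0) / xs.length;
}

// Median: middle value of the sorted runs (average of the two middle ones for an even count).
function median(xs: number[]): number {
  const sorted = xs.slice().sort((a, b) => a - b);
  const mid = Math.floor(sorted.length / 2);
  return sorted.length % 2 === 0 ? (sorted[mid - 1] + sorted[mid]) / 2 : sorted[mid];
}

console.log("mean:", mean(cosmosDbMs).toFixed(1), mean(tableStorageMs).toFixed(1));
console.log("median:", median(cosmosDbMs), median(tableStorageMs));
console.log("ratio of means:", (mean(cosmosDbMs) / mean(tableStorageMs)).toFixed(2));
```

With these numbers the medians are 3132.5 and 1810.5 msec, so comparing medians gives practically the same picture as comparing averages.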

Friday, June 4, 2021

Issue with PnP JS sp.site.exists() call when page url contains hashes

Today we ran into an interesting problem when we tried to use the sp.site.exists(url) method from PnP JS to determine whether a site collection with the specified url exists: all calls returned 403 with System.UnauthorizedAccessException:

During analysis I found that for all sites it made HTTP POST calls to the following url: https://{tenant}.sharepoint.com/sites/_api/contextinfo, which is definitely wrong because https://{tenant}.sharepoint.com/sites is not a site url but a managed path. Then we noticed that the url of the page where this code was executed contains #/:

https://{tenant}.sharepoint.com/sites/mysitecol#/favorites

and it seems that PnP JS parses it incorrectly in this case. As a workaround we checked which API is used inside the sp.site.exists(url) call (/_api/SP.Site.Exists) and rewrote the code with an explicit API call instead:

let promise = context.spHttpClient.post(context.pageContext.site.absoluteUrl + "/_api/SP.Site.Exists",
  SPHttpClient.configurations.v1, {
    headers: {
      "accept": "application/json;"
    },
    body: JSON.stringify({
      url: url
    })
  }).then((response: SPHttpClientResponse) => {
    response.json().then((exists: any) => {
      if (exists.value) {
        // site exists
      } else {
        // site doesn't exist
      }
    });
  });
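In the workaround above the caller can't use the result, because the inner promise is not returned. Below is a sketch of the same /_api/SP.Site.Exists call wrapped in an async function that returns Promise<boolean>. To keep it self-contained, HttpPoster is a hypothetical function type standing in for spHttpClient.post(); in SPFx it could be implemented as (url, body) => context.spHttpClient.post(url, SPHttpClient.configurations.v1, { headers: { "accept": "application/json;" }, body }), assuming SPHttpClientResponse provides the ok, status and json() members used here.

```typescript
// Subset of the response members used below.
interface JsonResponse {
  ok: boolean;
  status: number;
  json(): Promise<any>;
}

// Hypothetical stand-in for spHttpClient.post(url, config, { headers, body }).
type HttpPoster = (url: string, body: string) => Promise<JsonResponse>;

async function siteExists(post: HttpPoster, currentSiteUrl: string, url: string): Promise<boolean> {
  const response = await post(`${currentSiteUrl}/_api/SP.Site.Exists`, JSON.stringify({ url }));
  if (!response.ok) {
    throw new Error(`SP.Site.Exists failed with HTTP ${response.status}`);
  }
  const result = await response.json();
  // As in the code above, the boolean result is read from the "value" property.
  return result.value === true;
}
```

Callers can then simply write if (await siteExists(post, siteUrl, url)) { ... }.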

I hope PnP JS will fix this issue at some point.

Update 2021-07-05: the issue with PnP JS was solved by adding an initialization call:

sp.setup({
  spfxContext: this.context
});

See this discussion for more details.

Wednesday, May 26, 2021

Latest Az 6.0.0 module conflicts with PnP.PowerShell 1.3.0

Today the new Az 6.0.0 module was released (we had been waiting for this release since it should contain the fix for this issue: Webapp:Set-AzWebApp doesn't update app settings of App service). However, it introduced its own problem: it conflicts with PnP.PowerShell 1.3.0. The order in which these 2 modules are imported matters: if PnP.PowerShell is imported before Az, the call to Connect-AzAccount throws the following error:

Connect-AzAccount : InteractiveBrowserCredential authentication failed:
Method not found: 'Void Microsoft.Identity.Client.Extensions.Msal.MsalCacheHelper.RegisterCache(Microsoft.Identity.Client.ITokenCache)'.
+ Connect-AzAccount
+ ~~~~~~~~~~~~~~~~~
+ CategoryInfo : CloseError: (:) [Connect-AzAccount], AuthenticationFailedException
+ FullyQualifiedErrorId : Microsoft.Azure.Commands.Profile.ConnectAzureRmAccountCommand

Here is minimal PowerShell code which reproduces the issue:

Import-Module "PnP.PowerShell" -MinimumVersion "1.3.0"
Import-Module "Az" -MinimumVersion "6.0.0"
Connect-AzAccount

In order to avoid the error, the Az module should be imported before PnP.PowerShell:

Import-Module "Az" -MinimumVersion "6.0.0"
Import-Module "PnP.PowerShell" -MinimumVersion "1.3.0"
Connect-AzAccount

Tuesday, May 25, 2021

Camlex 5.3 and Camlex.Client 4.2 released: support for StorageTZ attribute for DateTime values

Today new versions of the Camlex library (both for server side and CSOM) have been released: Camlex 5.3 and Camlex.Client 4.2. For the client object model, separate packages are available for Sharepoint Online and on-premise:

| Package | Version | Description |
|---|---|---|
| Camlex.NET.dll | 5.3.0 | Server object model (on-prem) |
| Camlex.Client.dll | 4.2.0 | Client object model (SP online) |
| Camlex.Client.2013 | 4.2.0 | Client object model (SP 2013 on-prem) |
| Camlex.Client.2016 | 4.2.0 | Client object model (SP 2016 on-prem) |
| Camlex.Client.2019 | 4.2.0 | Client object model (SP 2019 on-prem) |

This release adds the possibility to specify the StorageTZ attribute for DateTime values. Main credits for this release go to Ivan Russo, who implemented the basic part of the new feature. Now when you create a CAML query for DateTime values, you may pass "true" into the IncludeTimeValue() method, which will then add the StorageTZ="True" attribute to the Value tag:

var now = new DateTime(2021, 5, 18, 17, 31, 18);
string caml = Camlex.Query().Where(x => (DateTime)x["Created"] > now.IncludeTimeValue(true)).ToString();

will generate the following CAML:

<Where>
  <Gt>
    <FieldRef Name="Created" />
    <Value Type="DateTime" IncludeTimeValue="True" StorageTZ="True">2021-05-18T17:31:18Z</Value>
  </Gt>
</Where>
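To show the shape of this CAML independently of Camlex, here is a small TypeScript sketch. dateGreaterThanCaml is a hypothetical helper, not part of Camlex: it builds the same Where/Gt block for a DateTime field and adds the IncludeTimeValue and StorageTZ attributes on request, formatting the date in UTC without milliseconds to match the Value text above.

```typescript
// Builds <Where><Gt>...</Gt></Where> for a DateTime field.
function dateGreaterThanCaml(field: string, value: Date, includeTime: boolean, storageTz: boolean): string {
  // toISOString() gives e.g. 2021-05-18T17:31:18.000Z; drop the milliseconds.
  const iso = value.toISOString().replace(/\.\d{3}Z$/, "Z");
  const attrs = [`Type="DateTime"`];
  if (includeTime) attrs.push(`IncludeTimeValue="True"`);
  if (storageTz) attrs.push(`StorageTZ="True"`);
  return (
    `<Where><Gt><FieldRef Name="${field}" />` +
    `<Value ${attrs.join(" ")}>${iso}</Value></Gt></Where>`
  );
}

console.log(dateGreaterThanCaml("Created", new Date(Date.UTC(2021, 4, 18, 17, 31, 18)), true, true));
```

Note that JavaScript months are 0-based, so Date.UTC(2021, 4, ...) is May 2021.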

Thank you for using Camlex and, as usual, if you have ideas for its further improvement, you may post them here.

Thursday, May 20, 2021

One problem with bundling SPFx solution

If you run "gulp bundle" for your SPFx project and get the following error:

No such file or directory "node_modules\@microsoft\gulp-core-build-sass\node_modules\node-sass\vendor"

Try running the following command first:

node node_modules\@microsoft\gulp-core-build-sass\node_modules\node-sass\scripts\install.js

It will create a "vendor" subfolder under "node_modules\@microsoft\gulp-core-build-sass\node_modules\node-sass" and download the necessary binaries there:

Downloading binary from https://github.com/sass/node-sass/releases/download/v4.12.0/win32-x64-64_binding.node
Download complete
Binary saved to ...\node_modules\@microsoft\gulp-core-build-sass\node_modules\node-sass\vendor\win32-x64-64\binding.node

After that the "gulp bundle" command should work.

Tuesday, May 11, 2021

New task group is not visible in edit Azure DevOps pipeline window

If you have created a new task group in Azure DevOps and want to add it to your pipeline, you may find that the task group isn't visible in the Add task window (I'm talking about editing the pipeline in the visual designer, not editing the yaml file). Even if you stop editing and open the pipeline for editing again, it won't appear. In this case try clicking the Refresh link at the top: it will fetch the latest results from DevOps, and your task group should appear after that. Note that you should search by the task group name:

Wednesday, May 5, 2021

How to suppress warning "TenantId ... contains more than one active subscription. First one will be selected for further use" when use Connect-AzAccount

When you use the Connect-AzAccount cmdlet from the Az.Accounts module and there are several subscriptions in your tenant, it shows the following warning:

"TenantId ... contains more than one active subscription. First one will be selected for further use. To select another subscription, use Set-AzContext"

If you want to handle this situation yourself and let the user explicitly select the needed subscription, this warning may not be needed (and it may confuse end users). In order to suppress it, use the "-WarningAction Ignore" parameter:

Connect-AzAccount -WarningAction Ignore

In this case the warning won't be shown:

You may use this technique to suppress warnings from other cmdlets too.

Friday, April 30, 2021

Provision Azure Storage table via ARM template

It is possible to provision an Azure Storage table via the New-AzStorageTable cmdlet. However, it is also possible to provision it via an ARM template and the New-AzResourceGroupDeployment cmdlet. The latter technique is quite powerful because it allows you to provision many different Azure resources in a universal way. In order to provision an Azure Storage table via an ARM template, use the following template:

"resources": [
  {
    "type": "Microsoft.Storage/storageAccounts",
    "name": "[parameters('storageAccountName')]",
    "apiVersion": "2019-04-01",
    "kind": "StorageV2",
    "location": "[parameters('location')]",
    "sku": {
      "name": "Standard_LRS"
    },
    "properties": {
      "supportsHttpsTrafficOnly": true
    }
  },
  {
    "name": "[concat(parameters('storageAccountName'),'/default/','Test')]",
    "type": "Microsoft.Storage/storageAccounts/tableServices/tables",
    "apiVersion": "2019-06-01",
    "dependsOn": [
      "[resourceId('Microsoft.Storage/storageAccounts', parameters('storageAccountName'))]"
    ]
  }
]

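In this example we provision both the Azure storage account and the table Test in it. It is also possible to provision only the table: in this case use only the second part of the template (without the dependsOn element, since the storage account is then not declared in the template).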
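If you need several tables, the table resource can be generated instead of copy-pasted. A TypeScript sketch under the assumption that the storage account parameter is called storageAccountName as in the template above; the dependsOn element is only added on request, because, as far as I know, ARM rejects a dependsOn that points to a resource which is not defined in the same template. ArmResource and tableResources are hypothetical names.

```typescript
// Minimal shape of the table resource used in the template above.
interface ArmResource {
  name: string;
  type: string;
  apiVersion: string;
  dependsOn?: string[];
}

function tableResources(tableNames: string[], includeDependsOn: boolean): ArmResource[] {
  return tableNames.map((table) => {
    const resource: ArmResource = {
      name: `[concat(parameters('storageAccountName'),'/default/','${table}')]`,
      type: "Microsoft.Storage/storageAccounts/tableServices/tables",
      apiVersion: "2019-06-01",
    };
    if (includeDependsOn) {
      // Use only when the storage account is declared in the same template.
      resource.dependsOn = [
        "[resourceId('Microsoft.Storage/storageAccounts', parameters('storageAccountName'))]",
      ];
    }
    return resource;
  });
}

// Tables only, for an existing storage account:
console.log(JSON.stringify({ resources: tableResources(["Test", "Logs"], false) }, null, 2));
```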

Monday, April 26, 2021

Calculate Azure AD groups count via MS Graph in PowerShell

If you need to fetch Azure AD groups or, e.g., calculate the total count of AAD groups via the MS Graph API in PowerShell, you may use the Powershell-MicrosoftGraph project on github. First you need to clone the repository locally and copy its module folder to a local PowerShell Modules folder:

git clone 'https://github.com/Freakling/Powershell-MicrosoftGraph'
Copy-item -Path "Powershell-MicrosoftGraph\MicrosoftGraph\" -Destination ($env:PSModulePath.Split(';')[-1]) -recurse -force

We will make Graph requests using app permissions. This means that you need a registered AAD app with the Group.Read.All permission for fetching the groups:

Copy the clientId and clientSecret of this AAD app and the tenantId of your tenant (you may copy it from Azure portal > Azure AD overview tab). With all this data in place, run the following script:

$appID = "..."
$appSecret = "..."
$tenantID = "..."
$credential = New-Object System.Management.Automation.PSCredential($appID, (ConvertTo-SecureString $appSecret -AsPlainText -Force))
$token = Get-MSGraphAuthToken -credential $credential -tenantID $tenantID
(Invoke-MSGraphQuery -URI 'https://graph.microsoft.com/v1.0/groups' -token $token -recursive -tokenrefresh -credential $credential -tenantID $tenantID | select -ExpandProperty Value | measure).Count

It will output total count of groups in your AAD.
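Graph returns groups in pages, and each page links to the next one via @odata.nextLink; following these links is presumably what the -recursive switch of Invoke-MSGraphQuery takes care of. The counting logic itself is simple, as this TypeScript sketch shows. fetchPage is a hypothetical function that performs an authenticated GET (e.g. with fetch and an Authorization: Bearer header) and returns the parsed JSON of one page.

```typescript
// One page of a Graph collection response.
interface GraphPage<T> {
  value: T[];
  "@odata.nextLink"?: string;
}

type FetchPage<T> = (url: string) => Promise<GraphPage<T>>;

// Follows @odata.nextLink until the last page and adds up the items.
async function countAll<T>(fetchPage: FetchPage<T>, firstUrl: string): Promise<number> {
  let url: string | undefined = firstUrl;
  let count = 0;
  while (url) {
    const page: GraphPage<T> = await fetchPage(url);
    count += page.value.length;
    url = page["@odata.nextLink"]; // undefined on the last page
  }
  return count;
}
```

Usage: countAll(fetchPage, "https://graph.microsoft.com/v1.0/groups").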

Thursday, April 22, 2021

Change "Allow public client flows" property of Azure AD apps via PowerShell

Some time ago I wrote about several problems related to changing the "Allow public client flows" property of Azure AD apps (at the time that post was written, this property was called differently in the UI: "Default client type > Treat application as public client"; nowadays it is called "Allow public client flows"): Several problems when use Set-AzureADApplication cmdlet with AzureAD app with allowPublicClient = true.

The problem was that it was not possible to change this setting from a PowerShell script via the Set-AzureADApplication cmdlet. However, it is still possible to change it from a script (the solution was found by Piotr Satka, so all credits go to him): you need to use other cmdlets, Get-AzureADMSApplication and Set-AzureADMSApplication. Here is a sample:

$azureAdMsApps = Get-AzureADMSApplication
$azureAdMsApp = $azureAdMsApps | Where-Object { $_.AppId -eq $appId }
Set-AzureADMSApplication -ObjectId $azureAdMsApp.Id -IsFallbackPublicClient $value | Out-Null

Using this code you will be able to change "Allow public client flows" property for Azure AD apps via PowerShell.

Friday, April 9, 2021

How to test certificate-based authentication in Azure functions for Sharepoint Online on local PC

If you develop Azure functions, you probably often run them locally on your dev PC rather than in Azure. It simplifies development and debugging. In this post I will show how to test certificate-based authentication for Sharepoint Online in Azure functions running locally. First of all we need to register an AAD app in the Azure portal and grant it Sharepoint permissions:

Don't forget to grant Admin consent after adding permissions.

After that, generate a self-signed certificate using the Create-SelfSignedCertificate.ps1 script from here: Granting access via Azure AD App-Only:

.\Create-SelfSignedCertificate.ps1 -CommonName "MyCertificate" -StartDate 2021-04-08 -EndDate 2031-04-09

It will generate 2 files:

- private key: .pfx

- public key: .cer

Go to the registered AAD app > Certificates & secrets > Certificates > Upload certificate and upload the generated .cer file. After uploading, copy the certificate thumbprint: it will be needed for the Azure function code below.

In an Azure function, certificate-based authentication for Sharepoint Online can be done with the following code (using OfficeDevPnP.Core):

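using System.Security.Cryptography.X509Certificates; // StoreName, StoreLocation

using (var authMngr = new OfficeDevPnP.Core.AuthenticationManager())
{
    // the certificate is looked up by thumbprint in the Personal store of the current user
    using (var ctx = authMngr.GetAzureADAppOnlyAuthenticatedContext(siteUrl, clientId, tenant, StoreName.My, StoreLocation.CurrentUser, certificateThumbprint))
    {
        ...
    }
}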

Here we specify the clientId of our AAD app, the copied certificate thumbprint and the tenant in the form {tenant}.onmicrosoft.com.
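A copied thumbprint may contain spaces or invisible characters (e.g. when it is copied from the Windows certificate dialog rather than from the Azure portal), which can prevent the certificate from being found in the store. A small TypeScript sketch that normalizes a copied thumbprint before putting it into configuration; it assumes a SHA-1 thumbprint, i.e. 40 hex characters, and normalizeThumbprint is a hypothetical name.

```typescript
// Removes spaces and any other non-hex characters (including invisible Unicode marks)
// and upper-cases the rest.
function normalizeThumbprint(raw: string): string {
  const hex = raw.replace(/[^0-9a-fA-F]/g, "").toUpperCase();
  // SHA-1 thumbprints are 20 bytes = 40 hex characters.
  if (hex.length !== 40) {
    throw new Error(`Unexpected thumbprint length ${hex.length}`);
  }
  return hex;
}
```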

Before running it we need to perform one extra step: install the certificate into the certificate store on the local PC. It can be done by double clicking the .pfx file, after which Windows will open the Certificate Import Wizard:

Since our code uses the Personal store (StoreName.My) of the current user (StoreLocation.CurrentUser), select Store Location = Current User. Then specify the password and import your certificate into the store. You may check that the certificate is installed properly by opening MMC console > Add/Remove Snap-in > Certificates. The imported certificate should appear under Personal > Certificates:

After that you will be able to run Azure functions locally which communicate with Sharepoint Online using certificate-based authentication.

Tuesday, March 30, 2021

Create new site collections in Sharepoint Online using app-only permissions with certificate-based authentication (without appinv.aspx and client secret)

In the past, if we needed to create site collections in Sharepoint Online, we registered a new SP app on the appregnew.aspx page and granted it Full control permissions on the tenant level using appinv.aspx (alternatively, instead of appregnew.aspx, we could register a new AAD app in the Azure portal and then use its clientId on appinv.aspx). But nowadays this is not the preferred way to achieve this goal. In this article I will show how to create new site collections using app-only permissions with certificate-based authentication (without appinv.aspx and client secret).

First of all we need to register a new AAD app in the Azure portal and grant it the Sites.FullControl.All API permission (after granting permissions, admin consent is also needed):

Now for testing purposes let's try to create a modern Communication site using the clientId and clientSecret:

using (var ctx = new OfficeDevPnP.Core.AuthenticationManager().GetAppOnlyAuthenticatedContext("https://{tenant}-admin.sharepoint.com", "{clientId}", "{clientSecret}"))
{
    ctx.RequestTimeout = Timeout.Infinite;
    var tenant = new Tenant(ctx);
    var properties = new SiteCreationProperties
    {
        Url = "https://{tenant}.sharepoint.com/sites/{siteUrl}",
        Lcid = 1033,
        TimeZoneId = 59,
        Owner = "{username}@{tenant}.onmicrosoft.com",
        Title = "{siteTitle}",
        Template = "SITEPAGEPUBLISHING#0",
        StorageMaximumLevel = 0,
        StorageWarningLevel = 0
    };
    var op = tenant.CreateSite(properties);
    ctx.Load(tenant);
    ctx.Load(op);
    ctx.ExecuteQueryRetry();
    ctx.Load(op, i => i.IsComplete);
    ctx.ExecuteQueryRetry();
    // poll until the site creation operation is completed
    while (!op.IsComplete)
    {
        Thread.Sleep(30000);
        op.RefreshLoad();
        ctx.ExecuteQueryRetry();
        Console.Write(".");
    }
    Console.WriteLine("Site is created");
}

If we try to run this code, we will get Microsoft.SharePoint.Client.ServerUnauthorizedAccessException:

Access denied. You do not have permission to perform this action or access this resource.

Now let's create a self-signed certificate as described here: Granting access via Azure AD App-Only. Then upload the public key (.cer file) to our AAD app:

After that let's change the C# example shown above to use the certificate private key (.pfx file) and its password for authentication. The code for creating the site collection remains the same:

using (var ctx = new OfficeDevPnP.Core.AuthenticationManager().GetAzureADAppOnlyAuthenticatedContext(
    "https://{tenant}-admin.sharepoint.com",
    "{clientId}",
    "{tenant}.onmicrosoft.com",
    @"C:\{certFileName}.pfx",
    "{certPassword}"))
{
    ctx.RequestTimeout = Timeout.Infinite;
    var tenant = new Tenant(ctx);
    var properties = new SiteCreationProperties
    {
        Url = "https://{tenant}.sharepoint.com/sites/{siteUrl}",
        Lcid = 1033,
        TimeZoneId = 59,
        Owner = "{username}@{tenant}.onmicrosoft.com",
        Title = "{siteTitle}",
        Template = "SITEPAGEPUBLISHING#0",
        StorageMaximumLevel = 0,
        StorageWarningLevel = 0
    };
    var op = tenant.CreateSite(properties);
    ctx.Load(tenant);
    ctx.Load(op);
    ctx.ExecuteQueryRetry();
    ctx.Load(op, i => i.IsComplete);
    ctx.ExecuteQueryRetry();
    // poll until the site creation operation is completed
    while (!op.IsComplete)
    {
        Thread.Sleep(30000);
        op.RefreshLoad();
        ctx.ExecuteQueryRetry();
        Console.Write(".");
    }
    Console.WriteLine("Site is created");
}

This code works and the site collection is successfully created. This is how you can create site collections in Sharepoint Online using app-only permissions and certificate-based authentication.
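Both samples above poll op.IsComplete every 30 seconds with no upper limit, so a stuck operation would keep the process waiting forever. Here is a generic TypeScript sketch of the same polling idea with a maximum number of attempts; isComplete is a hypothetical callback that would reload the operation state (like op.RefreshLoad() plus ExecuteQueryRetry() above), and waitUntilComplete is a hypothetical name.

```typescript
// Calls isComplete() until it returns true or maxAttempts checks have been made,
// waiting intervalMs between checks. Resolves to false if it gave up.
async function waitUntilComplete(
  isComplete: () => Promise<boolean>,
  intervalMs: number,
  maxAttempts: number
): Promise<boolean> {
  for (let attempt = 1; attempt <= maxAttempts; attempt++) {
    if (await isComplete()) {
      return true; // operation finished
    }
    if (attempt < maxAttempts) {
      // wait before the next check, like Thread.Sleep(30000) in the C# loop
      await new Promise<void>((resolve) => setTimeout(resolve, intervalMs));
    }
  }
  return false;
}
```

E.g. waitUntilComplete(check, 30000, 40) would give up after roughly 20 minutes instead of waiting indefinitely.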

Tuesday, March 16, 2021

Attach onClick handler on div dynamically in runtime using css class selector in React and Fluent UI

Sometimes in React we may need to attach a javascript handler to an html element the "old" way, which is closer to the DOM manipulation we did in plain javascript code. I.e. if for some reason you can't get a component reference and the only thing you have is the css class of the html element in the DOM, you may still work with it via DOM manipulation.

As an example we will use the SearchBox component from Fluent UI. It renders a magnifier icon internally, and it is quite hard to get a ref to it. The highlighted magnifier is rendered as a div with the "ms-SearchBox-iconContainer" css class:

Imagine that we want to attach an onClick handler to the magnifier icon so users can click it and get search results. Here is how it can be achieved:

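// keep a single function reference so the same listener can be removed later
private _onClickHandler = () => this._onClick(this);

private _onClick(self: Search) {
    // search logic
}

public componentDidMount() {
    let node = document.querySelector(".ms-SearchBox-iconContainer");
    if (node) {
        node.addEventListener("click", this._onClickHandler);
    }
}

public componentWillUnmount() {
    let node = document.querySelector(".ms-SearchBox-iconContainer");
    if (node) {
        // same event name ("click") and same function reference as in componentDidMount
        node.removeEventListener("click", this._onClickHandler);
    }
}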

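So we add the handler in componentDidMount and remove it in componentWillUnmount. Inside these methods we use document.querySelector() to find the actual div to which we need to attach the onClick handler. Keep in mind that removeEventListener only removes a listener when it gets the same event name ("click", not "onClick") and the same function reference that was passed to addEventListener. Passing this as a parameter of _onClick is a safeguard for the case when _onClick itself is registered as the listener: then this inside the method would point to the div element rather than to the search component. When _onClick is called from an arrow function, this already refers to the component.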
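removeEventListener only removes a listener when both the event name and the function reference match what was passed to addEventListener. The following TypeScript sketch illustrates this with a plain EventTarget (available in browsers and in Node.js 15+), so it runs without React or Fluent UI; the EventTarget stands in for the div found via document.querySelector().

```typescript
const button = new EventTarget();
let clicks = 0;
const handleClick = (): void => {
  clicks++;
};

button.addEventListener("click", handleClick);
button.dispatchEvent(new Event("click")); // clicks is now 1

// Wrong event name and a new function object: nothing is removed.
button.removeEventListener("onClick", () => handleClick());
button.dispatchEvent(new Event("click")); // clicks is now 2

// Same event name and the same function reference: the listener is removed.
button.removeEventListener("click", handleClick);
button.dispatchEvent(new Event("click")); // clicks stays 2

console.log(clicks);
```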